Agents hold massive potential to transform every industry.

In this post, I build my first stateful agent, one that acts as a barista. The agent is built on LangGraph and provides a looping chat interface where customers can order beverages in plain natural language. I also built 'nodes' to represent different entities such as the cafe's live menu and the 'backroom' ordering system. At the heart of this application lies the ability of the Large Language Model (LLM) to call functions, so that is what I want to review first.

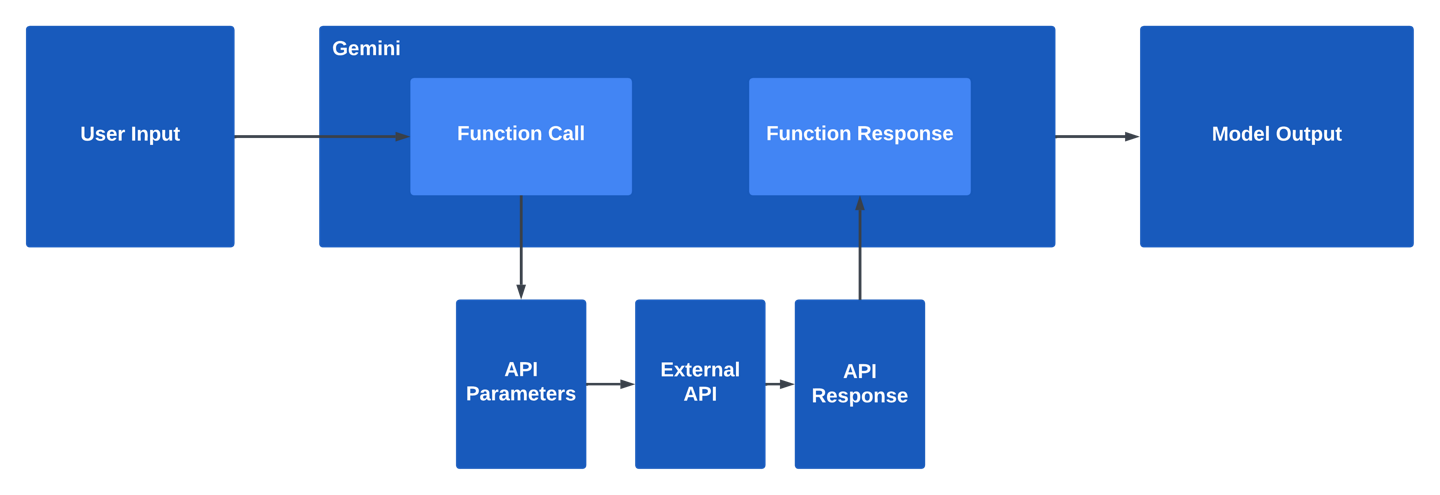

Function Calling

Function calling lets developers create a description of a function in their code, then pass that description to a language model in a request. The response from the model includes the name of a function that matches the description and the arguments to call it with. Function calling lets you use functions as tools in generative AI applications, and you can define more than one function within a single request. A few terms come up repeatedly:

- Function schema: JSON schemas that define the structure of the functions available for the model to call. They specify the function name, parameters, descriptions (docstrings), and expected types (type annotations). Here is an example:

  {
    "name": "get_weather",
    "description": "Get the current weather for a given location",
    "parameters": {
      "type": "object",
      "properties": {
        "location": {
          "type": "string",
          "description": "The city or location to get the weather for"
        }
      },
      "required": ["location"]
    }
  }

- Function call: during the conversation, if the model determines that a function should be invoked (for example, because answering the user requires information it does not have), it returns a function call instead of a regular text response.

- Function response: once the client receives the function call, it executes the actual function and returns the result to the model as a function response. The conversation then continues.

- Automatic vs manual function calling:

  - Manual function calling: In this mode, the client (developer) has full control over when and how to invoke the function. You receive the function_call, validate it, execute the function manually, and send the function_response back to the model.

  - Automatic function calling: In this mode, the Python SDK (or equivalent client library) automatically invokes the function when the model requests it. This simplifies the process but should only be used when the functions are safe to execute without manual intervention (e.g., no side effects).

Important: The SDK converts function parameter type annotations to a format the API understands (genai.protos.FunctionDeclaration). The API only supports a limited selection of parameter types, and the Python SDK's automatic conversion only supports a subset of that: AllowedTypes = int | float | bool | str | list['AllowedTypes'] | dict
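To make the idea of converting type annotations into a schema more concrete, here is a minimal sketch using only the standard library. It is not the SDK's actual conversion: the `describe_function` helper, the `JSON_TYPES` mapping, and the extra `days` parameter are assumptions made for illustration, and only four scalar types are handled.

```python
import inspect
from typing import get_type_hints

# Simplified mapping from Python annotations to JSON Schema type names.
# Assumption: only these scalar types are handled in this sketch.
JSON_TYPES = {int: "integer", float: "number", bool: "boolean", str: "string"}


def describe_function(func) -> dict:
    """Build a function schema from a function's signature and docstring."""
    hints = get_type_hints(func)
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        annotation = hints.get(name, str)
        if annotation not in JSON_TYPES:
            raise TypeError(f"Unsupported parameter type for {name!r}: {annotation}")
        properties[name] = {"type": JSON_TYPES[annotation]}
        # Parameters without a default value must be supplied by the model.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_weather(location: str, days: int = 1) -> str:
    """Get the current weather for a given location."""
    return f"Weather for {location} over {days} day(s)"


schema = describe_function(get_weather)
print(schema)
```

The sketch rejects any annotation it does not know about, which mirrors the point above that only a limited set of parameter types can be converted.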

Here's how function calling works in practice:

- Define the Functions: You provide a set of functions that the model can call. For instance, a function to fetch weather data, book a flight, or query a database.

- Start the Conversation: You initiate a conversation with the model, providing the function schemas so that the model knows what functions are available.

- Model Requests a Function Call: If the user asks something like, "What's the weather in New York?", the model may respond with a function_call instead of a text answer.

- Client Executes the Function: You (or the SDK) execute the get_weather function with the argument "New York" and get the result, e.g., "Partly cloudy, 72°F".

- Return the Function Response: You send the result back to the model as a function_response.

- Continue the Conversation: The model uses the function response to generate a final response to the user: "The weather in New York is partly cloudy, 72°F."
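The round trip described in these steps can be sketched in plain Python. This is only an illustration of manual function calling: `fake_model` is a hypothetical stand-in for a real LLM, the message dictionaries are an invented format rather than any SDK's wire format, and `get_weather` returns a canned string.

```python
def get_weather(location: str) -> str:
    """Stand-in implementation; a real one would query a weather service."""
    return "Partly cloudy, 72°F"


# Functions the client is willing to execute, keyed by name.
AVAILABLE_FUNCTIONS = {"get_weather": get_weather}


def fake_model(messages: list) -> dict:
    """Hypothetical stand-in for an LLM: it asks for a function call first,
    then answers in text once a function response is in the history."""
    last = messages[-1]
    if last["role"] == "function":
        summary = last["content"]
        text = f"The weather in New York is {summary[0].lower() + summary[1:]}."
        return {"role": "model", "text": text}
    return {
        "role": "model",
        "function_call": {"name": "get_weather", "args": {"location": "New York"}},
    }


def run_turn(user_text: str) -> str:
    messages = [{"role": "user", "content": user_text}]
    reply = fake_model(messages)
    # Keep handling function calls until the model answers in plain text.
    while "function_call" in reply:
        call = reply["function_call"]
        func = AVAILABLE_FUNCTIONS.get(call["name"])
        if func is None:
            # Validate before executing: never run a function the client did not offer.
            raise ValueError(f"Model requested unknown function: {call['name']}")
        result = func(**call["args"])
        messages.append(reply)
        messages.append({"role": "function", "name": call["name"], "content": result})
        reply = fake_model(messages)
    return reply["text"]


answer = run_turn("What's the weather in New York?")
print(answer)
```

The validation step before `func(**call["args"])` is the part that automatic function calling takes out of your hands, which is why it is best reserved for functions without side effects.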

BaristaBot

Now I will employ LangGraph to define a stateful graph-based application built on top of the Gemini API. LangGraph is very customizable and could even offer memory, persistence and streaming, though I do not touch upon them yet. I am going to define an application graph that models the state transitions for the application. The app will define a state schema, and an instance of that schema is propagated through the graph.

Each node in the graph represents an action or step that can be taken. Nodes will make changes to the state in some way through code that you define. These changes can be the result of invoking an LLM, by calling an API, or executing any logic that the node defines. Each edge in the graph represents a transition between states, defining the flow of the program. Edge transitions can be fixed, for example if you define a text-only chatbot where output is always displayed to a user, you may always transition from chatbot -> user. The transitions can also be conditional, allowing you to add branching (like an if-else statement) or looping (like for or while loops).
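Before bringing in LangGraph, the node and edge ideas can be sketched with a toy runner in plain Python. Everything here (`run_graph`, the `routers` table, the scripted `inputs`) is a hypothetical illustration and not LangGraph's API; it only shows a fixed edge, a conditional edge, and the loop they form.

```python
from typing import Callable, Dict

END = "__end__"  # sentinel node name that stops the run in this sketch

State = dict
Node = Callable[[State], State]
Router = Callable[[State], str]


def run_graph(nodes: Dict[str, Node], routers: Dict[str, Router], start: str, state: State) -> State:
    """Repeatedly apply the current node, then ask its router where to go next."""
    current = start
    while current != END:
        state = nodes[current](state)
        current = routers[current](state)
    return state


def chatbot(state: State) -> State:
    # A real node would call an LLM; this one just echoes the current user line.
    reply = f"You said: {state['inputs'][state['turn']]}"
    return {**state, "transcript": state["transcript"] + [reply]}


def user(state: State) -> State:
    # Advance to the next scripted user input.
    return {**state, "turn": state["turn"] + 1}


routers = {
    # Fixed edge: the chatbot always hands over to the user.
    "chatbot": lambda state: "user",
    # Conditional edge: loop back while scripted inputs remain, otherwise stop.
    "user": lambda state: "chatbot" if state["turn"] < len(state["inputs"]) else END,
}

final = run_graph(
    {"chatbot": chatbot, "user": user},
    routers,
    start="chatbot",
    state={"inputs": ["hi", "a latte please"], "turn": 0, "transcript": []},
)
print(final["transcript"])
```

Without the conditional edge on `user`, the loop would have no way to terminate, which is the same problem the real graph has to solve.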

State is a fundamental concept for a LangGraph app. A state object is passed between every node and transition in the app. Here, OrderState holds the conversation history, a structured order, and a flag indicating whether the customer has finished placing their order.
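One detail worth making concrete is how a node's partial update is combined with the existing state. In the state definition used in this post, the messages field is annotated with add_messages so that new messages are appended rather than replacing the history. The sketch below imitates that behaviour in plain Python; `merge_update` and `REDUCERS` are hypothetical names, and the real add_messages does more than simple list concatenation.

```python
from typing import Callable, Dict


def append_messages(existing: list, new: list) -> list:
    """Reducer: keep the history and add the new entries at the end."""
    return existing + new


# Keys listed here are merged with a reducer; every other key is overwritten.
REDUCERS: Dict[str, Callable[[list, list], list]] = {"messages": append_messages}


def merge_update(state: dict, update: dict) -> dict:
    """Return a new state with a node's partial update applied."""
    merged = dict(state)
    for key, value in update.items():
        if key in REDUCERS:
            merged[key] = REDUCERS[key](state.get(key, []), value)
        else:
            merged[key] = value
    return merged


state = {"messages": [("user", "hi")], "order": [], "finished": False}
state = merge_update(state, {"messages": [("ai", "Welcome!")]})
state = merge_update(state, {"finished": True})
print(state)
```

This is why a node can return only the keys it changed: the history grows, while plain fields such as the finished flag are simply overwritten.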

Here, the system instruction defines the main behaviors of the chatbot as well as rules for when to call different functions, rules for the conversation, like tone or what is permitted to discuss. Remember that the agent will return those function_calls and then automatically call those functions via @tool, which we will explore later.

from typing import Annotated, List

from langgraph.graph.message import add_messages
from pydantic import BaseModel, Field
from typing_extensions import TypedDict


class OrderItem(BaseModel):
    """Represents an item in the customer's order."""

    item: str = Field(description="The name of the item.")
    modifiers: List[str] = Field(default_factory=list, description="A list of modifiers for the item.")
    quantity: int = Field(default=1, description="The quantity of the item.")


class OrderState(TypedDict):
    """State representing the customer's order conversation."""

    # This preserves the conversation history.
    messages: Annotated[list, add_messages]

    # The customer's in-progress order.
    order: List[OrderItem]

    # Flag indicating that the order is placed and completed.
    finished: bool


# The system instruction defines how the chatbot is expected to behave and includes
# rules for when to call different functions, as well as rules for the conversation,
# such as tone and what is permitted for discussion.
# Each string fragment ends with a space so the concatenated sentences do not run together.
BARISTABOT_SYSINT = (
    "system",  # 'system' indicates the message is a system instruction.
    "You are a BaristaBot, an interactive cafe ordering system. A human will talk to you about the available products you have and you will answer any questions about menu items (and only about menu items - no off-topic discussion, but you can chat about the products and their history). "
    "The customer will place an order for 1 or more items from the menu, which you will structure and send to the ordering system after confirming the order with the human. "
    "Add items to the customer's order with add_to_order, and reset the order with clear_order. "
    "While conversing, you can also inform customers of the total price they have to pay so far. "
    "To see the contents of the order so far, call get_order (this is shown to you, not the user). "
    "Always confirm_order with the user (double-check) before calling place_order. Calling confirm_order will display the order items to the user and returns their response to seeing the list. Their response may contain modifications. "
    "Always verify and respond with drink and modifier names from the MENU before adding them to the order. "
    "If you are unsure a drink or modifier matches those on the MENU, ask a question to clarify or redirect. "
    "You only have the modifiers listed on the menu. "
    "Once the customer has finished ordering items, call confirm_order to ensure it is correct, then make any necessary updates and then call place_order. Once place_order has returned, thank the user and say goodbye!",
)

# This is the message with which the system opens the conversation.
WELCOME_MSG = "Welcome to the BaristaBot cafe. Type `q` to quit. How may I serve you today?"

Chatbot node

To really illustrate how LangGraph works, the following program defines a chatbot node that will execute a single turn in a chat conversation using the instructions supplied. Each node in the graph operates on the state object. The state (a Python dictionary) is passed as a parameter into the node (a function) and the new state is returned. It is like state = node(state). The add_messages annotation on OrderState.messages indicates that messages are appended instead of replaced.

from langchain_google_genai import ChatGoogleGenerativeAI
from langgraph.graph import START, StateGraph

llm = ChatGoogleGenerativeAI(model="gemini-1.5-flash-latest")


def chatbot(state: OrderState) -> OrderState:
    """The chatbot itself. A simple wrapper around the model's own chat interface."""
    message_history = [BARISTABOT_SYSINT] + state["messages"]
    return {"messages": [llm.invoke(message_history)]}


graph_builder = StateGraph(OrderState)
graph_builder.add_node("chatbot", chatbot)
graph_builder.add_edge(START, "chatbot")

chat_graph = graph_builder.compile()

Human node

The human node displays the last message from the LLM to the user and then prompts them for their next input. Here, this has been simplified to the print and input functions, but in a real-world application we could render the chat to a display or audio, and accept input from a mic or on-screen keyboard.

In addition, the chatbot node function is updated to include the welcome message.

from langchain_core.messages import AIMessage


def human_node(state: OrderState) -> OrderState:
    """Display the last model message to the user, and receive the user's input."""
    last_msg = state["messages"][-1]
    print("Model:", last_msg.content)

    user_input = input("User: ")

    # If it looks like the user is trying to quit, flag the conversation as over.
    if user_input in {"q", "quit", "exit", "goodbye"}:
        state["finished"] = True

    return state | {"messages": [("user", user_input)]}


def chatbot_with_welcome_msg(state: OrderState) -> OrderState:
    """The chatbot itself. A wrapper around the model's own chat interface."""
    if state["messages"]:
        # Continue the conversation with the model.
        new_output = llm.invoke([BARISTABOT_SYSINT] + state["messages"])
    else:
        # No messages yet: open the conversation with the welcome message.
        new_output = AIMessage(content=WELCOME_MSG)

    return state | {"messages": [new_output]}


# Start building a new graph.
graph_builder = StateGraph(OrderState)

graph_builder.add_node("chatbot", chatbot_with_welcome_msg)
graph_builder.add_node("human", human_node)

graph_builder.add_edge(START, "chatbot")
graph_builder.add_edge("chatbot", "human")

Note that if we added an unconditional edge from human back to chatbot, the graph would loop forever because there is no exit condition. To fix this, we introduce a conditional edge. This is similar to a regular graph transition, except a custom function is called to determine which edge to traverse next. Conditional edge functions take the state as input and return a string naming the node to transition to.

from typing import Literal

from IPython.display import Image
from langgraph.graph import END


def maybe_exit_human_node(state: OrderState) -> Literal["chatbot", "__end__"]:
    """Route to the chatbot, unless it looks like the user is exiting."""
    if state.get("finished", False):
        return END
    else:
        return "chatbot"


graph_builder.add_conditional_edges("human", maybe_exit_human_node)

chat_with_human_graph = graph_builder.compile()

# Render the compiled graph (intended for a notebook environment).
Image(chat_with_human_graph.get_graph().draw_mermaid_png())

Live Menu

BaristaBot currently has no awareness of the items available at the cafe, so it will naturally come up with (hallucinate) its own menu. One simple fix is to hard-code a menu into the system prompt. However, to simulate a real-world setting where the agent responds to fluctuating stock levels, the menu is put into a custom tool instead (in this post the tool still returns hard-coded text).

This system uses two types of tools: stateless tools that can be run automatically, and stateful tools that modify the order. The get_menu tool is stateless, in that it does not make any changes to the live order, so it can be called automatically.
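This split ends up driving the routing in the finished graph. As a rough sketch in plain Python, the decision can be reduced to a function over the requested tool names; `route_tool_calls` and `AUTO_TOOLS` are hypothetical names, while "tools", "ordering", and "human" are the node names used in this post's graph.

```python
from typing import Iterable, List

AUTO_TOOLS = {"get_menu"}  # stateless tools that can be run automatically


def route_tool_calls(tool_names: List[str], auto_tools: Iterable[str] = AUTO_TOOLS) -> str:
    """Pick the next node for a batch of requested tool calls."""
    if not tool_names:
        return "human"  # no tool call: show the reply to the customer
    if any(name in auto_tools for name in tool_names):
        return "tools"  # at least one stateless tool was requested
    return "ordering"  # only order-modifying tools were requested


print(route_tool_calls([]))
print(route_tool_calls(["get_menu"]))
print(route_tool_calls(["add_to_order"]))
```

Note that with this rule a batch mixing both kinds of call is sent to the automatic node, so in practice it is worth checking how such a mixed batch should be handled.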

In a LangGraph app, you can turn Python functions into tools by applying the @tool decorator. Remember that functions decorated with @tool MUST NOT update the conversation state.

from langchain_core.tools import tool


@tool
def get_menu() -> str:
    """Provide the latest up-to-date menu."""
    # Note that this is just hard-coded text, but you could connect this to a live
    # stock database, or you could use Gemini's multi-modal capabilities and take
    # live photos of your cafe's chalk menu or the products on the counter and
    # assemble them into an input.
    return """
    MENU:
    Coffee Drinks:
    Espresso - $3.00
    Americano - $3.50
    Cold Brew - $4.00

    Coffee Drinks with Milk:
    Latte - $4.50
    Cappuccino - $4.00
    Cortado - $4.25
    Macchiato - $3.75
    Mocha - $4.75
    Flat White - $4.50

    Tea Drinks:
    English Breakfast Tea - $3.00
    Green Tea - $3.00
    Earl Grey - $3.25

    Tea Drinks with Milk:
    Chai Latte - $4.25
    Matcha Latte - $4.75
    London Fog - $4.00

    Other Drinks:
    Steamer - $3.50
    Hot Chocolate - $4.00

    Modifiers (some may have an extra charge):
    Milk options: Whole, 2%, Oat, Almond, 2% Lactose Free (default: whole)
    Espresso shots: Single, Double, Triple, Quadruple (default: Double)
    Caffeine: Decaf, Regular (default: Regular)
    Hot-Iced: Hot, Iced (default: Hot)
    Sweeteners: Vanilla, Hazelnut, Caramel, Chocolate, Sugar-free Vanilla
    Special requests: Extra hot, One pump, Half caff, Extra foam, etc.

    Soy milk is out of stock today.
    """

To add the tool to the graph, we wrap get_menu in a ToolNode, which is responsible for calling the tool and passing the response as a message through the graph. The tools are also bound to the llm object so that the underlying model knows they exist. Finally, the chatbot node is updated to use the tool-aware model.

from langgraph.prebuilt import ToolNode

tools = [get_menu]
tool_node = ToolNode(tools)

llm_with_tools = llm.bind_tools(tools)


def maybe_route_to_tools(state: OrderState) -> Literal["tools", "human"]:
    """Route between human or tool nodes, depending if a tool call is made."""
    if not (msgs := state.get("messages", [])):
        raise ValueError(f"No messages found when parsing state: {state}")

    # Only route based on the last message.
    msg = msgs[-1]

    # When the chatbot returns tool_calls, route to the "tools" node.
    if hasattr(msg, "tool_calls") and len(msg.tool_calls) > 0:
        return "tools"
    else:
        return "human"


def chatbot_with_tools(state: OrderState) -> OrderState:
    """The chatbot with tools. A simple wrapper around the model's own chat interface."""
    defaults = {"order": [], "finished": False}

    if state["messages"]:
        new_output = llm_with_tools.invoke([BARISTABOT_SYSINT] + state["messages"])
    else:
        new_output = AIMessage(content=WELCOME_MSG)

    # Set up some defaults if not already set, then pass through the provided state,
    # overriding only the "messages" field.
    return defaults | state | {"messages": [new_output]}


graph_builder = StateGraph(OrderState)

# Add the nodes, including the new tool_node.
graph_builder.add_node("chatbot", chatbot_with_tools)
graph_builder.add_node("human", human_node)
graph_builder.add_node("tools", tool_node)

# Chatbot may go to tools, or human.
graph_builder.add_conditional_edges("chatbot", maybe_route_to_tools)
# Human may go back to chatbot, or exit.
graph_builder.add_conditional_edges("human", maybe_exit_human_node)

# Tools always route back to chat afterwards.
graph_builder.add_edge("tools", "chatbot")

graph_builder.add_edge(START, "chatbot")
graph_with_menu = graph_builder.compile()

Order node

To build up an order during the chat conversation, you will need to update the state to track the order, and provide simple tools that update this state. These need to be explicit because the model should not have direct access to the app's internal state; otherwise the state could be manipulated arbitrarily.

The ordering tools will be added as stubs in a separate node so that you can edit the state directly. Using the @tool annotation is a handy way to define their schema, so the ordering tools below are implemented as empty Python functions.

As mentioned above, functions annotated with @tool are prohibited from modifying the state so we have to implement separate nodes (functions) to handle the state updates. The actual implementations have been deferred to the order_node, which we as developers exert total control over.
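The pattern of keeping state changes in code that the developer owns can be sketched without LangGraph. In this plain-Python illustration, `Item`, `summarize`, and `apply_tool_call` are hypothetical stand-ins, and only three of the tool names from this post are handled. Each call returns a new order together with a response built from that updated order.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Item:
    """Plain stand-in for the post's OrderItem model."""
    name: str
    modifiers: List[str] = field(default_factory=list)
    quantity: int = 1


def summarize(order: List[Item]) -> str:
    """Format the order as one line per item."""
    if not order:
        return "(no order)"
    return "\n".join(
        f"{item.quantity}x {item.name} ({', '.join(item.modifiers) or 'no modifiers'})"
        for item in order
    )


def apply_tool_call(order: List[Item], name: str, args: dict) -> Tuple[List[Item], str]:
    """Apply one requested tool call and return (new_order, response_text)."""
    if name == "add_to_order":
        quantity = args.get("quantity", 1)
        new_order = order + [Item(args["drink"], list(args.get("modifiers", [])), quantity)]
        return new_order, f"Added {quantity}x {args['drink']}.\n{summarize(new_order)}"
    if name == "remove_items":
        target = args["item_name"].lower()
        new_order = [item for item in order if item.name.lower() != target]
        removed = len(order) - len(new_order)
        return new_order, f"Removed {removed} item(s).\n{summarize(new_order)}"
    if name == "clear_order":
        return [], "Your order has been cleared."
    raise NotImplementedError(f"Unknown tool call: {name}")


order: List[Item] = []
order, _ = apply_tool_call(order, "add_to_order", {"drink": "Latte", "modifiers": ["Oat"]})
order, _ = apply_tool_call(order, "add_to_order", {"drink": "Espresso", "quantity": 2})
order, reply = apply_tool_call(order, "remove_items", {"item_name": "latte"})
print(reply)
```

Returning the new order alongside the response keeps the summary in step with the change that was just made, which is easy to get wrong when the summary is read from a stale copy of the state.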

from typing import Iterable


# Define tool schemas using Pydantic models. The tool functions below are stubs:
# they only declare a name, arguments, and docstring for the model to see.
class AddToOrderInput(BaseModel):
    drink: str = Field(description="The name of the drink to add to the order.")
    modifiers: List[str] = Field(default_factory=list, description="A list of modifiers for the drink (e.g., sugar, milk).")
    quantity: int = Field(default=1, description="The quantity of the drink to add.")


@tool(args_schema=AddToOrderInput)
def add_to_order(drink: str, modifiers: Iterable[str] = (), quantity: int = 1) -> str:
    """Adds the specified drink to the customer's order, including any modifiers and quantity.

    Returns:
        The updated order in progress.
    """


class RemoveItemsInput(BaseModel):
    item_name: str = Field(description="The name of the item to remove from the order.")


@tool(args_schema=RemoveItemsInput)
def remove_items(item_name: str) -> str:
    """Removes the specified item from the customer's order."""
    pass


class ConfirmOrderInput(BaseModel):
    pass


@tool(args_schema=ConfirmOrderInput)
def confirm_order() -> str:
    """Asks the customer if the order is correct."""
    pass


class GetOrderInput(BaseModel):
    pass


@tool(args_schema=GetOrderInput)
def get_order() -> str:
    """Returns the user's order so far."""
    pass


class ClearOrderInput(BaseModel):
    pass


@tool(args_schema=ClearOrderInput)
def clear_order():
    """Removes all items from the user's order."""
    pass


class PlaceOrderInput(BaseModel):
    pass


@tool(args_schema=PlaceOrderInput)
def place_order() -> int:
    """Sends the order to the barista for fulfillment."""
    pass

from random import randint

from langchain_core.messages import ToolMessage


def get_order_summary(order: List[OrderItem]) -> str:
    """Format the given order as one line per item."""
    if not order:
        return "(no order)"
    return "\n".join(
        f'{item.quantity}x {item.item} ({", ".join(item.modifiers) if item.modifiers else "no modifiers"})'
        for item in order
    )


def order_node(state: OrderState) -> OrderState:
    """The ordering node. This is where the order state is manipulated."""
    # Extract the latest message and a copy of the current order from the state.
    tool_msg = state.get("messages", [])[-1]
    order = list(state.get("order", []))
    outbound_msgs = []
    order_placed = False

    # Process each tool call in the latest message.
    for tool_call in tool_msg.tool_calls:
        try:
            if tool_call["name"] == "add_to_order":
                # Read the quantity once so a missing value falls back to 1 everywhere.
                quantity = tool_call["args"].get("quantity", 1)
                order.append(OrderItem(
                    item=tool_call["args"]["drink"],
                    modifiers=tool_call["args"].get("modifiers", []),
                    quantity=quantity,
                ))
                response = f"Added {quantity}x {tool_call['args']['drink']} to your order.\n{get_order_summary(order)}"

            elif tool_call["name"] == "remove_items":
                item_name = tool_call["args"]["item_name"]
                removed_count = sum(1 for item in order if item.item.lower() == item_name.lower())
                order = [item for item in order if item.item.lower() != item_name.lower()]
                if removed_count > 0:
                    # Summarize the updated local order, not the stale copy in the state.
                    response = f"Removed {removed_count} instance(s) of {item_name} from your order.\n{get_order_summary(order)}"
                else:
                    response = f"No instances of {item_name} found in your order."

            elif tool_call["name"] == "confirm_order":
                response = (
                    "Please confirm your order:\n"
                    f"{get_order_summary(order)}\n"
                    "Is this correct? (Yes/No)"
                )

            elif tool_call["name"] == "get_order":
                response = f"Your current order:\n{get_order_summary(order)}"

            elif tool_call["name"] == "clear_order":
                order = []
                response = "Your order has been cleared."

            elif tool_call["name"] == "place_order":
                order_text = get_order_summary(order)
                print("Sending order to kitchen!")
                print(order_text)
                order_placed = True
                response = f"Your order has been placed! Estimated wait time: {randint(1, 5)} minutes."

            else:
                # Handle unknown tool calls.
                raise NotImplementedError(f"Unknown tool call: {tool_call['name']}")

        except Exception as e:
            # Catch any errors during tool call processing and return an error message.
            response = f"An error occurred while processing your request: {str(e)}"

        # Record the tool results as tool messages.
        outbound_msgs.append(
            ToolMessage(
                content=response,
                name=tool_call["name"],
                tool_call_id=tool_call["id"],
            )
        )

    # Update the state with the new messages, order, and finished flag.
    return {
        "messages": outbound_msgs,
        "order": order,
        "finished": order_placed,
    }


def maybe_route_to_tools(state: OrderState) -> str:
    """Route between chat and tool nodes if a tool call is made."""
    if not (msgs := state.get("messages", [])):
        raise ValueError(f"No messages found when parsing state: {state}")

    msg = msgs[-1]

    # Check if the order is finished.
    if state.get("finished", False):
        # Terminate the conversation.
        return END
    elif hasattr(msg, "tool_calls") and len(msg.tool_calls) > 0:
        # Route to `tools` node for any automated tool calls first.
        if any(
            tool["name"] in tool_node.tools_by_name.keys() for tool in msg.tool_calls
        ):
            return "tools"
        else:
            return "ordering"
    else:
        return "human"

The graph is now almost complete. We set up two sets of tools corresponding to the nodes under which they operate: automated and ordering.

auto_tools = [get_menu]
tool_node = ToolNode(auto_tools)

order_tools = [add_to_order, confirm_order, get_order, clear_order, place_order, remove_items]

llm_with_tools = llm.bind_tools(auto_tools + order_tools)

graph_builder = StateGraph(OrderState)

# Nodes
graph_builder.add_node("chatbot", chatbot_with_tools)
graph_builder.add_node("human", human_node)
graph_builder.add_node("tools", tool_node)
graph_builder.add_node("ordering", order_node)

# Chatbot -> {ordering, tools, human, END}
graph_builder.add_conditional_edges("chatbot", maybe_route_to_tools)
# Human -> {chatbot, END}
graph_builder.add_conditional_edges("human", maybe_exit_human_node)

# Tools (both kinds) always route back to chat afterwards.
graph_builder.add_edge("tools", "chatbot")
graph_builder.add_edge("ordering", "chatbot")

graph_builder.add_edge(START, "chatbot")
graph_with_order_tools = graph_builder.compile()

Demonstration

We are ready to order with the AI-powered barista:

# The default recursion limit for traversing nodes is 25 - setting it higher means
# you can try a more complex order with multiple steps and round-trips.
config = {"recursion_limit": 150}

state = graph_with_order_tools.invoke({"messages": []}, config)

Here is a demo conversation:

Model: Welcome to the BaristaBot cafe. Type `q` to quit. How may I serve you today?

User: Please give me the menu

Model: Here's our menu! We have a wide variety of coffee and tea drinks, including espresso, Americano, lattes, cappuccinos, and more. We also offer hot chocolate and steamers. Many options can be customized with milk alternatives (whole, 2%, oat, almond, or 2% lactose-free), espresso shots, caffeine levels, and sweeteners. Please let me know what you'd like to order.

User: Im craving an americano

Model: Great choice! Would you like a regular or decaf Americano? And would you like it hot or iced?

User: decaf would be lovely

Model: Perfect. Decaf Americano. Hot or iced?

User: iced please

Model: Okay, one iced decaf Americano. Anything else for you?

User: 1 chololate for my kid

Model: I'm sorry, I didn't find "chololate" on our menu. Do you mean "Hot Chocolate"?

User: yeah that

Model: Okay, one iced decaf Americano and one hot chocolate. Anything else?

User: No, that should be enough

Model: Okay, to confirm your order: One iced decaf Americano and one hot chocolate. Is that correct?

User: Yes

Sending order to kitchen!

1x Iced Decaf Americano (no modifiers)

1x Hot Chocolate (no modifiers)

The order state has been captured both in the place_order function and in the final conversational state returned from executing the graph. This illustrates how you can integrate your own systems into a graph app, as well as collect the final results of executing such an app.

[OrderItem(item='Iced Decaf Americano', modifiers=[], quantity=1),

OrderItem(item='Hot Chocolate', modifiers=[], quantity=1)]